Last Friday I hacked together an experimental on-line recommender in PHP to evaluate time spent on computation vs size of datasets. Here are some preliminary (discouraging) results using a local memcache setup and the following configuration:

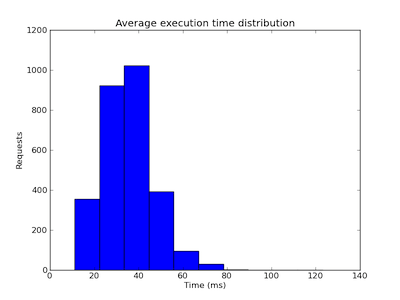

Users in memory: 500, Concurrent clients: 10, Ranked list length¹: 100, Item vector size: 20

The script runs for 10 seconds, during which each client spams recommendation requests. The requests are independent of one another and are thus handled separately.
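A test loop of this kind could be sketched roughly as follows in Python. This is not the script that produced the numbers in this post: `run_load_test` and `fake_request` are hypothetical names, and `fake_request` merely sleeps where a real client would call the recommender.

```python
import threading
import time


def run_load_test(request_fn, clients=10, duration_s=10.0):
    """Run `clients` threads that call request_fn() back to back for duration_s seconds.

    Returns a list of per-request latencies in milliseconds.
    """
    latencies_ms = []
    lock = threading.Lock()
    deadline = time.monotonic() + duration_s

    def client_loop():
        local = []
        while time.monotonic() < deadline:
            start = time.perf_counter()
            request_fn()  # one independent recommendation request
            local.append((time.perf_counter() - start) * 1000.0)
        with lock:
            latencies_ms.extend(local)

    threads = [threading.Thread(target=client_loop) for _ in range(clients)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return latencies_ms


def fake_request():
    # Hypothetical stand-in for a call to the recommender (assumption).
    time.sleep(0.005)


if __name__ == "__main__":
    results = run_load_test(fake_request, clients=10, duration_s=1.0)
    print(len(results), "requests,", round(sum(results) / len(results), 1), "ms mean")
```

Each client collects its own latencies and merges them once at the end, so the lock is not taken on the hot path.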

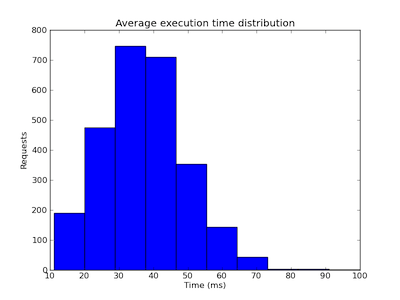

Increasing the number of users to 5000 with the other variables intact yields the following results:

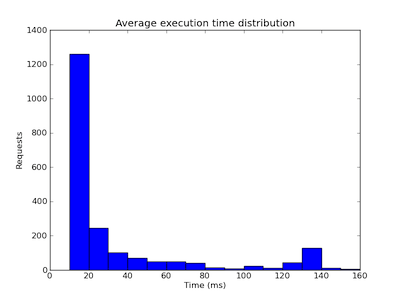

This figure shows a distribution similar to the one for 500 users, which suggests we are not capped by the number of users in memory. The total throughput is around 2700 requests. Next I decrease the ranked list size stored in memory. The average latency remains the same; however, total throughput increases to roughly 6000 requests.

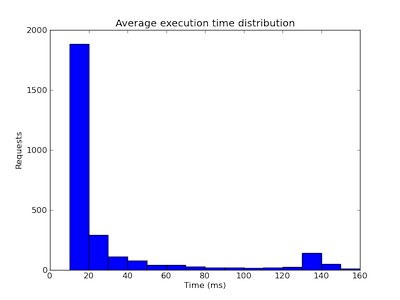

And with shorter preference vectors (set to 10) but ranked list length back to 100, the throughput is around 4000 requests.
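To compare the runs on a common scale, the totals can be turned into rates. The small sketch below assumes that each total counts requests across all 10 clients over the full 10 seconds; the run labels are my own shorthand.

```python
DURATION_S = 10  # each run lasts 10 seconds
CLIENTS = 10     # concurrent clients

# Approximate totals quoted in the text; labels are informal (assumption).
runs = {
    "baseline (list length 100, vector size 20)": 2700,
    "shorter ranked list": 6000,
    "vector size 10, list length 100": 4000,
}


def rates(total_requests, duration_s=DURATION_S, clients=CLIENTS):
    """Return (requests per second overall, requests per second per client)."""
    overall = total_requests / duration_s
    return overall, overall / clients


for label, total in runs.items():
    overall, per_client = rates(total)
    print(f"{label}: {overall:.0f} req/s overall, {per_client:.0f} req/s per client")
```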

I’m unsure why there is a significant portion of requests taking about 120-140 ms.

In summary, the number of preference factors and the ranked list length become important configuration variables for on-line computation. Much can, and needs to, be done to further increase the performance, for example in how the top K recommendations are selected. For a production system we must get the majority of all requests down to, or below, 100 ms. This should also include updating the context vector.
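One common way to select the top K without sorting the whole ranked list is a bounded heap. The sketch below is in Python and rests on assumptions: scoring an item as the dot product of a user vector and an item vector is my guess at the setup, not a description of the PHP prototype, and `top_k` is a hypothetical helper.

```python
import heapq
import random


def top_k(user_vector, item_vectors, k):
    """Return the k (item_id, score) pairs with the highest dot-product score."""
    def score(item_vector):
        return sum(u * v for u, v in zip(user_vector, item_vector))

    scored = ((item_id, score(vec)) for item_id, vec in item_vectors.items())
    # heapq.nlargest tracks only k candidates at a time instead of sorting everything.
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    random.seed(0)
    vector_size = 20   # item vector size from the configuration above
    list_length = 100  # ranked list length from the configuration above
    user = [random.random() for _ in range(vector_size)]
    items = {i: [random.random() for _ in range(vector_size)] for i in range(list_length)}
    for item_id, s in top_k(user, items, k=5):
        print(item_id, round(s, 3))
```

The scoring cost grows with both the list length and the vector size, which would fit the observation that both variables affect throughput.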

Thoughts on PHP

PHP4 was still the mainstream version when I last hacked stuff using PHP. And obviously a lot has changed since. For starters, PHP has a class system! There are also array functions for operations equivalent to map and reduce (maybe they were there earlier too, but I didn’t know). My impression so far is that it is pretty easy to get started with (hey, nothing to compile, right?), but it feels rather bloated.

PHP is extensive. There seem to be functions for almost everything, so having a browser with php.net open is essential. On the other hand, keeping the code easy to understand felt like a challenge. For example, using arrays as dictionaries, lists, and tuples alike quickly had me reading my own code over and over again to see what was being passed around. With experience this ought to be less of an issue. Thank you Netbeans for auto-completing my very long variable and function names. :)

Lastly, I found myself looking for threading capabilities to run multiple clients concurrently. Turns out there is no such thing in core PHP. Instead I ended up writing a Python script to wrap my PHP script in. Epic fail. Naturally, PHP doesn’t come from a multi-threaded background; it relies on the web server to handle each request individually, and that is where the concurrency comes from.
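A wrapper of that kind can be quite small. The sketch below is not my original script; it is a minimal illustration, with `run_clients` as a hypothetical helper, of starting several copies of a command in parallel from Python. The demo command just runs the Python interpreter so the example is self-contained; for PHP it would be something like `["php", "client.php"]`, where the script name is a placeholder.

```python
import subprocess
import sys


def run_clients(command, clients=10):
    """Start `clients` copies of `command` in parallel and collect their output."""
    procs = [
        subprocess.Popen(command, stdout=subprocess.PIPE, text=True)
        for _ in range(clients)
    ]
    results = []
    for proc in procs:
        out, _ = proc.communicate()  # wait for this client to finish
        results.append((proc.returncode, out))
    return results


if __name__ == "__main__":
    # Placeholder command (assumption): replace with the real client invocation.
    command = [sys.executable, "-c", "print('ok')"]
    for code, out in run_clients(command, clients=10):
        print(code, out.strip())
```

All processes are started before any of them is waited on, so the clients really do run at the same time.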

¹ This is the result of the partial model generated off-line, and it is unique to every user.